When you travel between studios, or take a project recorded at home to a professional studio to be mixed or mastered, there are a few things you should consider before booking your appointment. You’ll need to make sure your hard drive is compatible with the studio’s computers and that your DAW session file can be read by the studio’s system. Many audio programs, such as ProTools, routinely change their file format, and the studio may not support the newest format when you get there. Organization is also key to a good workflow: if your new engineer has to spend time aligning all the files, he might lose the vibe of the song, or maybe the session altogether. I have also seen countless hours of valuable recording time wasted on re-consolidating files, searching for bounced files, and tracking down missing plugins. Here are a few tips to keep your session flowing. If you are also tracking instruments, be sure to properly prepare for your recording session.

Call ahead

Call your studio ahead of time to ask about the best way to get files to them. Many recording studios provide a checklist or “to-do” list for new clients or for inbound session files.

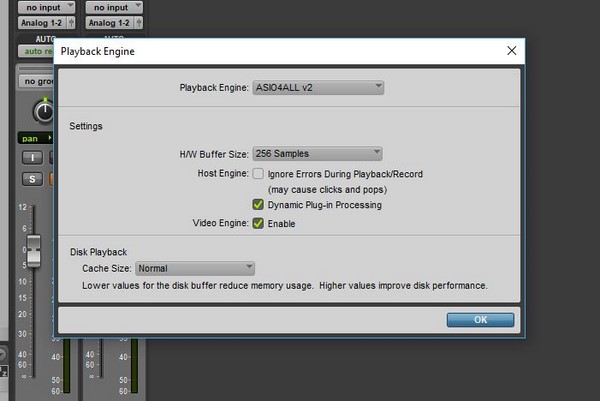

Check Software Version

DAW users may need to save their session in an earlier format. In ProTools this can be done with “Save Copy In” and then choosing the version needed. You will only be able to choose formats supported by your version of ProTools. Make sure you check the “All Audio Files” box so the audio is copied along with the session.

Sample Rates and Audio Formats

Ask the studio for its preferred sample rate. This is especially critical for audio for film and television. If necessary, convert your audio files. Most studios can handle a 96 kHz sample rate at 24-bit. It is unlikely that a home user would be working at anything higher than a 48 kHz session, but check with your engineer to be certain.
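If you want to confirm your files before sending them, a short script can list any that don't match the rate the studio asked for. The sketch below is a minimal Python example, assuming uncompressed PCM .WAV files in a single folder; the function name `check_wav_files` and the 48 kHz / 24-bit defaults are placeholders, not a studio standard. Python's built-in `wave` module does not read AIFF or 32-bit float WAV files, so those would need a different tool.

```python
import wave
from pathlib import Path

def check_wav_files(folder, target_rate=48000, target_bits=24):
    """List WAV files whose sample rate or bit depth differs from the target.

    target_rate and target_bits are placeholder values; use whatever your
    studio requests. Returns (filename, sample_rate, bit_depth) tuples.
    Only PCM WAV files can be read by the standard-library wave module.
    """
    mismatches = []
    for path in sorted(Path(folder).iterdir()):
        if path.suffix.lower() != ".wav":
            continue  # skip non-WAV files (session file, notes, etc.)
        with wave.open(str(path), "rb") as wav:
            rate = wav.getframerate()
            bits = wav.getsampwidth() * 8  # sample width is reported in bytes
        if rate != target_rate or bits != target_bits:
            mismatches.append((path.name, rate, bits))
    return mismatches
```

For example, `check_wav_files("Audio Files", 96000, 24)` (the folder name is just an example path) would list every file that still needs converting before it goes to a studio working at 96 kHz.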

Consolidation of Audio Files

If you are using a different DAW than the studio, then all audio tracks (regions/clips) need to be consolidated with no edits and have the same start time (00:00:00). Export these new files as .AIFF or .WAV at the highest resolution possible and label them accordingly. Do NOT make MP3s of these files to send to your engineer; keep them at the highest audio fidelity possible. Consolidating files makes it easy for your studio engineer to drop the entire audio files into a new session, and because they all have the same start time, everything will line up perfectly.
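To picture what consolidation does, think of each track as a list of samples: every clip is placed at its position on the timeline, and the gaps are filled with silence so the exported file begins at 00:00:00. The Python sketch below models that with plain lists; `consolidate_track` and the (start time, samples) clip format are hypothetical, and real DAWs also handle fades and crossfades, which this ignores.

```python
def consolidate_track(clips, sample_rate):
    """Render a track's clips into one continuous sample list starting at 00:00:00.

    clips       -- hypothetical list of (start_seconds, samples) pairs
    sample_rate -- session sample rate in Hz
    Gaps between clips become silence (0). If clips overlap, the one that
    appears later in the list overwrites the earlier one (no crossfades).
    """
    if not clips:
        return []
    # Convert each clip's timeline position from seconds to a sample offset.
    placed = [(round(start * sample_rate), list(samples)) for start, samples in clips]
    length = max(offset + len(samples) for offset, samples in placed)
    track = [0] * length  # start from silence covering the whole track
    for offset, samples in placed:
        track[offset:offset + len(samples)] = samples
    return track
```

For example, with a deliberately tiny 2 Hz "sample rate", clips starting at 0.5 s and 2.0 s become `[0, 1, 1, 0, 2, 2, 2]`: one sample of silence, the first clip, another gap, then the second clip. Because every consolidated file starts at sample 0, the engineer can drop them all into a new session at the same point.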

Preserve Plugins and Effects

If the sound you’re getting from a plugin or effect is vital to the song, you should print the effected audio to another audio track so it can be recreated without the plugin. Check to see what plugins are available at the studio. Some plugins may not load, but preserving the finalized audio on a track of its own means that sound can always be recalled.

Preserve Virtual Instruments and MIDI Data

Virtual instruments, including any audio coming from programs connected via ReWire such as Reason, should also be printed and consolidated to new audio tracks. Keep the MIDI information for these instruments in case sound replacement needs to be done. The studio is also likely to have high-quality virtual instruments, so keeping the MIDI data from home is a good idea.

Make a Working Copy “Save As”

Make a copy of the session file for your new engineer to work with. I suggest a new session containing just the consolidated audio and the takes you need to work with. If necessary, your engineer can go back to an older copy of the session to re-print an instrument or find another take of a track. You also want to back up your files from time to time: make a copy on a disc and put it in a safe place.
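Making dated copies of a session folder is easy to automate. This is a minimal Python sketch using the standard library's `shutil.copytree`; the function name `backup_session` and the timestamped folder naming are assumptions for illustration, and the backup location could be any second drive.

```python
import shutil
from datetime import datetime
from pathlib import Path

def backup_session(session_dir, backup_root):
    """Copy the whole session folder into backup_root under a timestamped name.

    Returns the path of the new copy. copytree raises FileExistsError rather
    than overwriting an existing folder, so each run creates a separate,
    dated snapshot and older copies are left untouched.
    """
    session = Path(session_dir)
    stamp = datetime.now().strftime("%Y-%m-%d_%H%M%S")
    destination = Path(backup_root) / f"{session.name}_{stamp}"
    shutil.copytree(session, destination)  # copies audio, session file, notes
    return destination
```

Keeping each snapshot under its own date means your engineer can always go back to an earlier version of the session if a take or instrument needs to be re-printed.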

Preserve Tempo and Click Tracks

Provide the BPM (beats per minute) of every song recorded with a click/metronome. Having general notes like this for any song is a good idea. I usually recommend printing click tracks to an audio file once the final take of the song is recorded and all timing adjustments are locked in. This ensures a copy of the timing and click is always available in case something goes wrong with the grid or tempo of the session.
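The arithmetic behind a click track is simple: one beat lasts 60 / BPM seconds, so at 120 BPM and 48 kHz a click falls every 24,000 samples. As a rough illustration, the Python sketch below writes a constant-tempo click to a 16-bit .WAV file starting at 00:00:00. The function `write_click_track` and its defaults are hypothetical, and it does not follow tempo changes; printing the session's own click from your DAW remains the more reliable record of the song's timing.

```python
import math
import struct
import wave

def write_click_track(path, bpm, beats, sample_rate=48000, click_ms=10, freq=1000.0):
    """Write a mono 16-bit WAV with a short sine 'tick' at the start of every beat.

    Beat n starts at sample round(n * 60 / bpm * sample_rate). Assumes a
    constant tempo. Returns the total number of samples written.
    """
    samples_per_beat = 60.0 / bpm * sample_rate
    total = round(beats * samples_per_beat)
    click_len = int(sample_rate * click_ms / 1000)  # tick length in samples
    audio = [0] * total  # silence between ticks
    for n in range(beats):
        start = round(n * samples_per_beat)
        for i in range(click_len):
            if start + i < total:
                # 80% of full scale keeps the tick safely below clipping
                audio[start + i] = int(0.8 * 32767 * math.sin(2 * math.pi * freq * i / sample_rate))
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)
        wav.setsampwidth(2)  # 2 bytes per sample = 16-bit
        wav.setframerate(sample_rate)
        wav.writeframes(struct.pack(f"<{total}h", *audio))
    return total
```

For example, `write_click_track("click_120bpm.wav", bpm=120, beats=8)` would produce a four-second file (eight beats at 0.5 s each).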

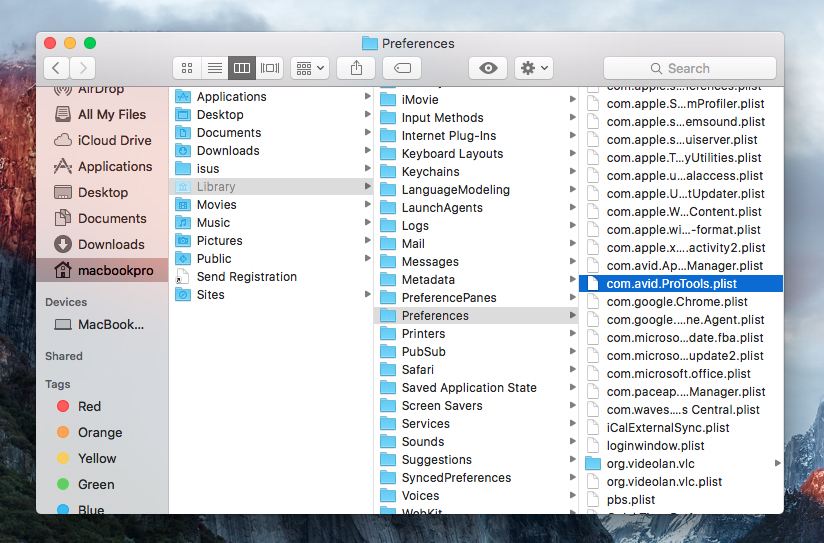

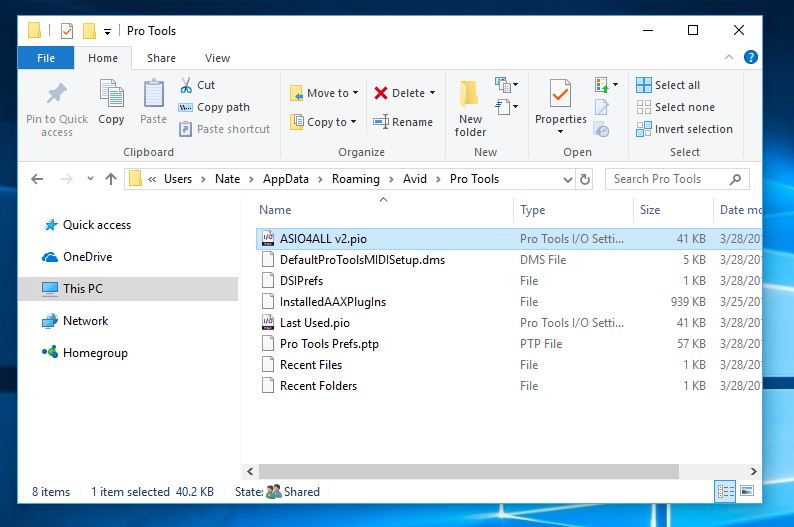

Hard Drive Formatting and Transferring

If you plan to work directly off your hard drive, be sure to check which file system you should be using. A Mac-formatted drive generally won’t be readable at a PC-based studio without extra software. Also be sure to ask about connectivity for drives: FireWire 400/800, USB 2/3, and Thunderbolt are all possible options. Optical media such as CDs or DVDs, as well as USB flash drives, are good ways to physically transfer session files, but the files will need to be copied onto another hard drive to work from. Your studio may provide a hard drive, so it’s best to clarify with them first.

Organize

Make the proper notes for your sessions and songs. The ProTools comment box is a useful tool for jotting quick notes about specific tracks. I like to include a simple .txt document in my session folder with basic information such as recording dates, microphones used, external processing, tempo, etc. Nowadays I also attach digital photos when I feel it’s necessary. Providing a lyric sheet is very useful for your engineer and producer when recording vocals and mixing.
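If you'd rather not retype the same notes for every song, a tiny script can write them into the session folder. This Python sketch simply writes one "Field: value" line per entry; the function name `write_session_notes`, the file name, and the fields are placeholders, so use whatever information your engineer needs.

```python
from pathlib import Path

def write_session_notes(session_dir, notes, filename="session_notes.txt"):
    """Write a plain-text notes file into the session folder.

    notes is a dict such as {"Tempo": "...", "Microphones": "..."};
    each entry becomes one "Field: value" line, in insertion order.
    Returns the path of the written file.
    """
    path = Path(session_dir) / filename
    lines = [f"{field}: {value}" for field, value in notes.items()]
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")
    return path
```

A call might look like `write_session_notes("My Song", {"Recorded": "...", "Microphones": "...", "External processing": "...", "Tempo": "... BPM"})`, with the placeholders filled in from your own session.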

Deliver Early

Send your files ahead of time if your studio allows it. This gives your producer or engineer a chance to look over the session, saving you valuable time later on. Fixing problems before you get to the studio will save you headaches and a ton of cash. There’s a good chance the engineer will not charge you anything just to check things over; after all, it saves them the headache of fixing everything on the day of the session. If your files are a real mess, you might want to budget some time for organizing them before tracking or mixing your sessions.

Home theater components and 7.1 surround systems have lagged for years because quality had to be sacrificed for the convenience of wireless transmission. This is still not fast enough to comfortably support live performance or studio applications, such as in-ear monitors or audio hardware, where engineers rely on zero latency. However, quality wireless transmission of multichannel audio with zero latency is not too far away. Obviously this is great if you rely on Bluetooth solely for data transfer. Sending files wirelessly between computers or from your camera to a laptop can now be accomplished in half the time.

systems and sound systems that were installed by a contractor tend to include affected equipment, even if they were installed after the FCC’s announcement. This is likely due to supply houses and contractors trying to unload inventory before they end up with nothing more than an expensive paperweight. I saw this with the 700 MHz reallocation a few years ago and am seeing it again now. Make sure to check your wireless frequencies and budget for replacements if needed. Luckily, the three largest manufacturers offer trade-in rebates on affected wireless systems. You must have proof of purchase and file for the trade-in rebate before the deadline. Sennheiser’s deadline is June 30th, 2018, and Shure’s deadline is July 30th, 2018. Audio-Technica’s rebate deadline is set for sometime in 2019. Refer to each manufacturer for trade-in specifics and how to collect your rebate.
